Massive Datasets & Generalization in ML

Summary: Big, publicly available datasets are great. Yet practitioners who seek to use models pretrained on outside data need to ask themselves how informative that data is likely to be for their purposes. “Dataset bias” and “task specificity” are important factors to keep in mind.

As I read deep learning papers these days, I am occasionally struck by the staggering amount of data some researchers are using for their experiments. While I typically work to develop representations that allow for good performance with less data, some scientists are racing full steam ahead in the opposite direction.

It was only a few years ago that we thought the ImageNet 2012 dataset, with 1.2 million labeled images, was quite large. Just six years later, researchers from Facebook AI Research (FAIR) have dwarfed ImageNet 2012 with a 3-billion-image dataset composed of hashtag-labeled images from Instagram. Google’s YouTube-8M dataset, geared towards large-scale video understanding, consists of audio/visual features extracted from 350,000 hours of video. Simulation tools have also been growing to incredible sizes; InteriorNet, for instance, is a simulation environment containing 22 million 3D interior scenes, hand-designed by over a thousand interior designers. And let’s not forget about OpenAI, whose multiplayer-game-playing AI is trained on a massive cluster of computers so that it can play 180 years of games against itself every day.

The list goes on.

In a blog post from May 2018, researchers from OpenAI estimated that the amount of computation used in the largest AI research experiments, like AlphaGo and Neural Architecture Search, is doubling every 3.5 months, and I suspect data usage is no different. Whenever machine learning performance starts to saturate, researchers develop a bigger or more complex model that is capable of consuming more data. For certain tasks, we may never completely saturate.
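That kind of compounding is easy to underestimate. As a back-of-the-envelope sketch (taking the 3.5-month figure at face value and assuming it holds steadily, which is an assumption rather than a claim from the blog post), a quantity that doubles every `d` months grows by a factor of `2 ** (12 / d)` per year:

```python
def growth_factor(doubling_months: float, elapsed_months: float) -> float:
    """Multiplicative growth after `elapsed_months` at a constant doubling time."""
    return 2 ** (elapsed_months / doubling_months)

# Assumes the 3.5-month doubling time from the text holds steadily.
per_year = growth_factor(3.5, 12)         # roughly an 11x increase per year
per_three_years = growth_factor(3.5, 36)  # well over a thousandfold
print(f"{per_year:.1f}x per year, {per_three_years:,.0f}x over three years")
```

For comparison, the same formula with a 24-month doubling time (a common statement of Moore’s law) gives only about 1.4x per year.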

So to what extent is all this data useful? For tasks involving video games, the test and training environments are identical by definition, and data is relatively easy to generate without human intervention, so more data is clearly beneficial. For most other domains, particularly those requiring real-world data, the hope is that models trained on these massive datasets will transfer (or generalize) well to similar tasks and other datasets. Yet in the real world, the large datasets released by big tech companies or used in machine learning benchmark competitions are rarely identical to the data your algorithm will see in the wild.

In addition to simple generalization, transfer learning is also common: a secondary, usually smaller, dataset is used to fine-tune the already-trained machine learning model. Generalization and transfer learning are distinct but related; I discuss them here rather interchangeably.
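The mechanics of transfer learning can be sketched in a few lines, under loudly stated assumptions: `pretrained_features` is a hypothetical, hand-written stand-in for the frozen layers of a model trained on a large source dataset, and the “target task” (is a point inside the unit circle?) is a toy. Only a new logistic-regression head is trained on the small target set, which is one common form of transfer learning; full fine-tuning would also update the pretrained weights.

```python
import math
import random

def pretrained_features(x):
    # HYPOTHETICAL stand-in for the frozen body of a network pretrained on a
    # large source dataset. Its "weights" are fixed and never updated below.
    a, b = x
    return [a, b, a * b, a * a + b * b]

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_head(examples, epochs=200, lr=0.05):
    # Train ONLY a new logistic-regression head on the small target set,
    # using plain stochastic gradient descent on the log loss.
    w = [0.0] * len(pretrained_features(examples[0][0]))
    bias = 0.0
    for _ in range(epochs):
        for x, y in examples:
            f = pretrained_features(x)
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + bias) - y
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            bias -= lr * err
    return w, bias

def predict(head, x):
    w, bias = head
    f = pretrained_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + bias > 0 else 0

# Toy target task: label a point 1 if it lies inside the unit circle.
rng = random.Random(0)

def sample(n):
    pts = [(rng.uniform(-1.5, 1.5), rng.uniform(-1.5, 1.5)) for _ in range(n)]
    return [(p, 1 if p[0] ** 2 + p[1] ** 2 < 1 else 0) for p in pts]

small_train, held_out = sample(60), sample(200)
head = train_head(small_train)
accuracy = sum(predict(head, x) == y for x, y in held_out) / len(held_out)
print(f"held-out accuracy: {accuracy:.2f}")
```

Whether the frozen features suit the new task is exactly what the held-out accuracy measures. Here the hypothetical feature map happens to include the squared radius, so the circle is easy to learn; a feature map containing only `a`, `b`, and `a * b` would cap the head’s accuracy no matter how long it trained.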

As the performance of our models has increased in recent years, questions regarding the quality and the specificity of the datasets we use for pretraining have become correspondingly more important.

When faced with a task to automate, you might hope that data on a related task is good enough for the task at hand: e.g., detecting dune buggies with an object detection network trained to detect cars. So how well should we expect a particular model trained on a particular dataset to generalize or transfer to your task? At least for the foreseeable future, the gold-standard answer is to try it and see how well it works. Even so, there are a few factors worth keeping in mind when using an off-the-shelf dataset.

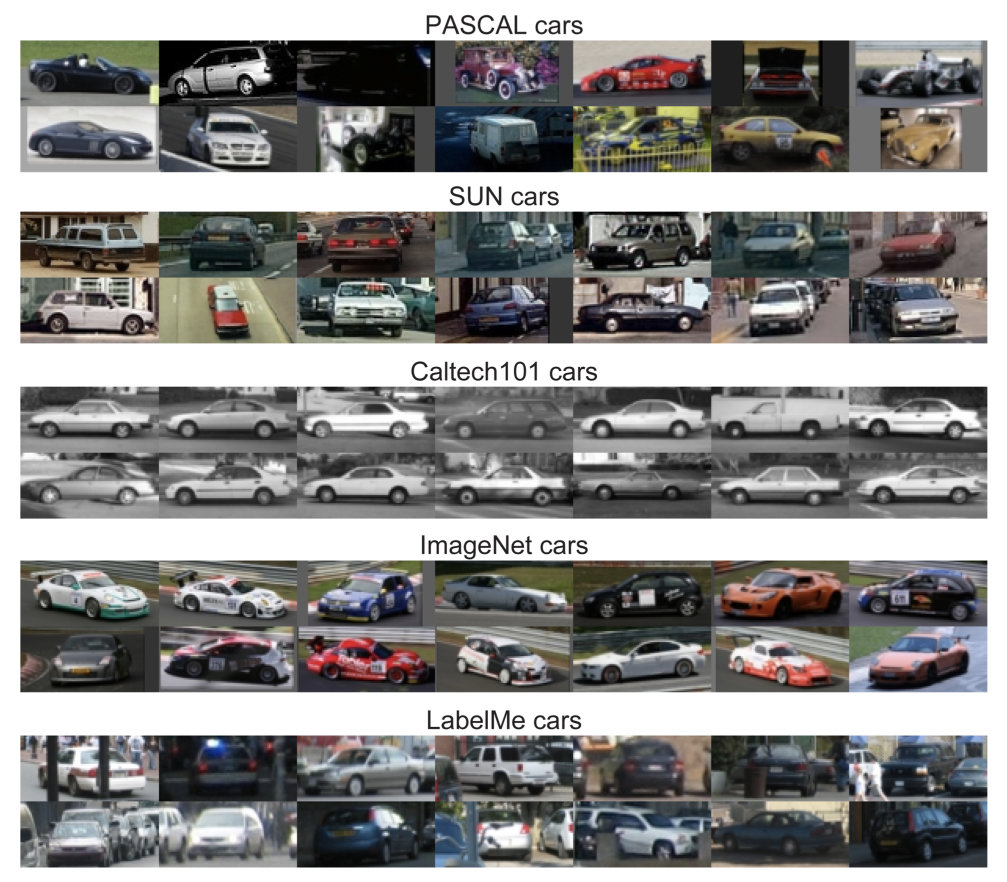

One of these factors is dataset bias. Since bias must typically be evaluated with respect to a specific task, it is unlikely that any publicly available dataset is truly unbiased for your needs. For example, if you are hoping to train a generic car detector, a dataset more than a few years old may be less effective on recent vehicle models. Data bias is notoriously difficult to quantify, yet for some types of data, like images, human evaluation is possible. In their 2011 paper Unbiased Look at Dataset Bias, computer vision researchers Antonio Torralba (MIT) and Alexei A. Efros (CMU) introduced a game they called “Name That Dataset!”, in which participants (and, later, computer vision algorithms) are asked to identify which dataset images of cars are drawn from; both the humans and the algorithms performed much better than random chance on most datasets.
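The logic of this test can be framed as a classification problem: train a classifier to predict which dataset an example came from, then compare its held-out accuracy with chance (1/k for k datasets). Doing much better than chance means the datasets carry distinguishable signatures, i.e., bias. This is a hypothetical illustration, not the paper’s setup: it swaps in a simple nearest-centroid classifier, and the three sources (`source_a`, `source_b`, `source_c`) and their feature offsets are made-up synthetic data rather than real image features.

```python
import random
from collections import defaultdict

def fit_centroids(examples):
    # Average the feature vectors belonging to each source dataset.
    sums, counts = {}, defaultdict(int)
    for feats, source in examples:
        acc = sums.setdefault(source, [0.0] * len(feats))
        for i, f in enumerate(feats):
            acc[i] += f
        counts[source] += 1
    return {src: [s / counts[src] for s in vec] for src, vec in sums.items()}

def name_that_dataset(centroids, feats):
    # Guess the source whose centroid is nearest (squared Euclidean distance).
    return min(
        centroids,
        key=lambda src: sum((c - f) ** 2 for c, f in zip(centroids[src], feats)),
    )

def dataset_test(train, test):
    # Return (held-out accuracy, chance accuracy of a uniform random guess).
    centroids = fit_centroids(train)
    correct = sum(name_that_dataset(centroids, f) == src for f, src in test)
    return correct / len(test), 1 / len(centroids)

# HYPOTHETICAL data: three sources whose features are shifted by
# dataset-specific "habits" (framing, viewpoint, collection process...).
rng = random.Random(42)
OFFSETS = {"source_a": (0.0, 0.0), "source_b": (3.0, 0.0), "source_c": (0.0, 3.0)}

def draw(n_per_source):
    return [([rng.gauss(mx, 1.0), rng.gauss(my, 1.0)], src)
            for src, (mx, my) in OFFSETS.items() for _ in range(n_per_source)]

accuracy, chance = dataset_test(draw(50), draw(100))
print(f"accuracy {accuracy:.2f} vs. chance {chance:.2f}")
```

Shrinking the offsets toward zero drives the accuracy back toward chance, which is what mutually unbiased datasets would look like under this test.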

Dataset bias has become an even more important concern as we see more AI algorithms “in the wild”, impacting the lives of non-researchers in potentially problematic ways. I will not dive deep on this topic here, but I highly recommend you watch Kate Crawford’s NIPS 2017 Keynote: The Trouble with Bias.

Even models trained on ImageNet are biased towards a certain type of image collection and labeling methodology that may not fully reflect the types of images you will see in production. Fortunately, recent work from researchers at Google Brain, Do Better ImageNet Models Transfer Better?, suggests that there is a positive correlation between performance on ImageNet and transfer learning ability to most other image classification tasks. There were, however, a few caveats; as the authors put it:

> Additionally, we find that, on two small fine-grained image classification datasets, pretraining on ImageNet provides minimal benefits, indicating the learned features from ImageNet do not transfer well to fine-grained tasks. Together, our results show that ImageNet architectures generalize well across datasets, but ImageNet features are less general than previously suggested.

This brings me to the other factor to keep in mind when using pretrained models: task specificity. Even models pretrained on a fairly general dataset like ImageNet may perform little better than randomly initialized ones when the target task is highly specific. And even for general tasks, features learned for one task are not necessarily useful for another. This was a point of note in the Exploring the Limits of Weakly Supervised Pretraining paper from FAIR: the researchers found that optimizing their pretraining to better predict whole-image labels came at a cost when fine-tuning for object localization tasks. It is also entirely possible that the structure of the model itself is not well suited to your new task. Recent work entitled Do CIFAR-10 Classifiers Generalize to CIFAR-10? found that a broad range of classifiers lose accuracy on a newly collected test set built to match the original distribution of a popular image classification benchmark, suggesting that benchmark accuracy numbers are more brittle than we might like.

Perhaps unsurprisingly, having the right data is the most straightforward way to ensure that your machine learning system reaches peak performance on your task. Even as the size of public datasets continues to grow, for many applications, there is often no substitute for collecting and annotating your own data.

As always, I welcome discussion in the comments below or on Hacker News. Feel free to ask questions, or let me know of some research I might be interested in.

References

- Dhruv Mahajan, Ross Girshick, Vignesh Ramanathan, Kaiming He, Manohar Paluri, Yixuan Li, Ashwin Bharambe & Laurens van der Maaten, Exploring the Limits of Weakly Supervised Pretraining, in: European Conference on Computer Vision (ECCV), 2018.

- Wenbin Li, Sajad Saeedi, John McCormac, Ronald Clark, Dimos Tzoumanikas, Qing Ye, Yuzhong Huang, Rui Tang & Stefan Leutenegger, InteriorNet: Mega-scale Multi-sensor Photo-realistic Indoor Scenes Dataset, in: British Machine Vision Conference (BMVC), 2018.

- Dario Amodei & Danny Hernandez, AI and Compute, OpenAI blog post, 2018.

- Antonio Torralba & Alexei A. Efros, Unbiased Look at Dataset Bias, in: Computer Vision and Pattern Recognition (CVPR), 2011.

- Simon Kornblith, Jonathon Shlens & Quoc V. Le, Do Better ImageNet Models Transfer Better?, arXiv preprint arXiv:1805.08974, 2018.

- Benjamin Recht, Rebecca Roelofs, Ludwig Schmidt & Vaishaal Shankar, Do CIFAR-10 Classifiers Generalize to CIFAR-10?, arXiv preprint arXiv:1806.00451, 2018.