DeepMind's AlphaZero and The Real World

AlphaZero is incredible. If you have yet to read DeepMind’s blog post about their recent paper in Science detailing the ins and outs of their legendary game-playing AI, I recommend you do so. In it, DeepMind’s scientists describe an intelligent system capable of playing the games of Go, Chess, and Shogi at superhuman levels. Even legendary chess Grandmaster Garry Kasparov says the moves selected by the system demonstrate a “superior understanding” of the games. Even more remarkable is that AlphaZero, a successor to the well-known AlphaGo and AlphaGo Zero, is trained entirely via self-play — it was able to learn good strategies without any meaningful human input.

So do these results imply that Artificial General Intelligence is soon to be a solved problem? Hardly. There is a massive difference between an artificially intelligent agent capable of playing chess and a robot that can solve practical real-world tasks, like exploring a building it has never seen before to find someone’s office. AlphaZero’s intelligence derives from its ability to make predictions about how a game is likely to unfold: it learns to predict which moves are better than others and uses this information to think a few moves ahead. As it learns to make increasingly accurate predictions, AlphaZero gets better at rejecting “bad moves” and is able to simulate deeper into the future. But the real world is almost immeasurably complex, and, to act in the real world, a system like AlphaZero must decide between a nearly infinite set of possible actions at every instant in time. Overcoming this limitation is not merely a matter of throwing more computational power at the problem:

Using AlphaZero to solve real problems will require a change in the way computers represent and think about the world.

Yet despite the complexity inherent in the real world, humans are still capable of making predictions about how the world behaves and using this information to make decisions. To understand how, we consider how humans learn to play games.

Human Decision Making and Structured Knowledge

Humans are actually pretty good at playing games like Chess and Go, which is why outperforming humans at these games has historically marked milestones for progress in AI. Like AlphaZero, humans develop an intuition for how they expect the game to evolve. Expert human players use this intuition to prefer likely moves and configurations of the world and to inform better decision making.

The conclusion that experts rely more on structured knowledge than on analysis is supported by a rare case study of an initially weak chess player, identified only by the initials D.H., who over the course of nine years rose to become one of Canada’s leading masters by 1987. Neil Charness, professor of psychology at Florida State University, showed that despite the increase in the player’s strength, he analyzed chess positions no more extensively than he had earlier, relying instead on a vastly improved knowledge of chess positions and associated strategies.

The game of Chess is often analyzed in terms of abstract board positions and Chess openings/endgames are also frequently taught as groups of moves, as in the Rule of the Square. Rather than thinking of individual moves, people are capable of thinking in terms of sequences of moves, which allows them to think about the board deeper into the future, once the sequence is executed. Expert players learn to develop an abstract representation of the board, in which fine-grained details may be ignored. Imagining the impact of moves and move sequences becomes a question of how the overall structure of the board will change, rather than the precise positions of each of the pieces.

It could be argued that AlphaZero is also capable of recognizing abstract patterns, since it too is capable of making predictions about who is likely to win. However, AlphaZero is structured such that it must explicitly construct future states of the board when imagining the future and cannot learn to plan completely in the abstract.

How does this ability to think in the abstract translate to decision-making in the real world? Humans do this all the time. As I write this, I am at my computer desk in my apartment. If the fire alarm were to go off, indicating that I should leave the building, my instinct would be to head towards my apartment door and go down the hall to the stairs, which would then bring me outside. By contrast, a robot might start planning one step at a time, expending enormous computational effort to decide whether walking should begin with the left or right foot, a decision that has little bearing on the overall solution.

For a robot, the planning problem becomes even more complicated when it finds itself in an unfamiliar building. The robot can no longer compute a precise plan, since planning a collision-free trajectory is effectively impossible when the locations of all the obstacles are unknown. Humans are capable of overcoming the computational issues that hold back machines because we think in the abstract. I know that bathrooms are likely to be dead ends, while hallways usually are not. If my goal is to leave the building, entering a bathroom is probably unwise.

So how can we encourage a system like AlphaZero to think in terms of these human-like abstract actions?

Abstract Actions and AlphaZero

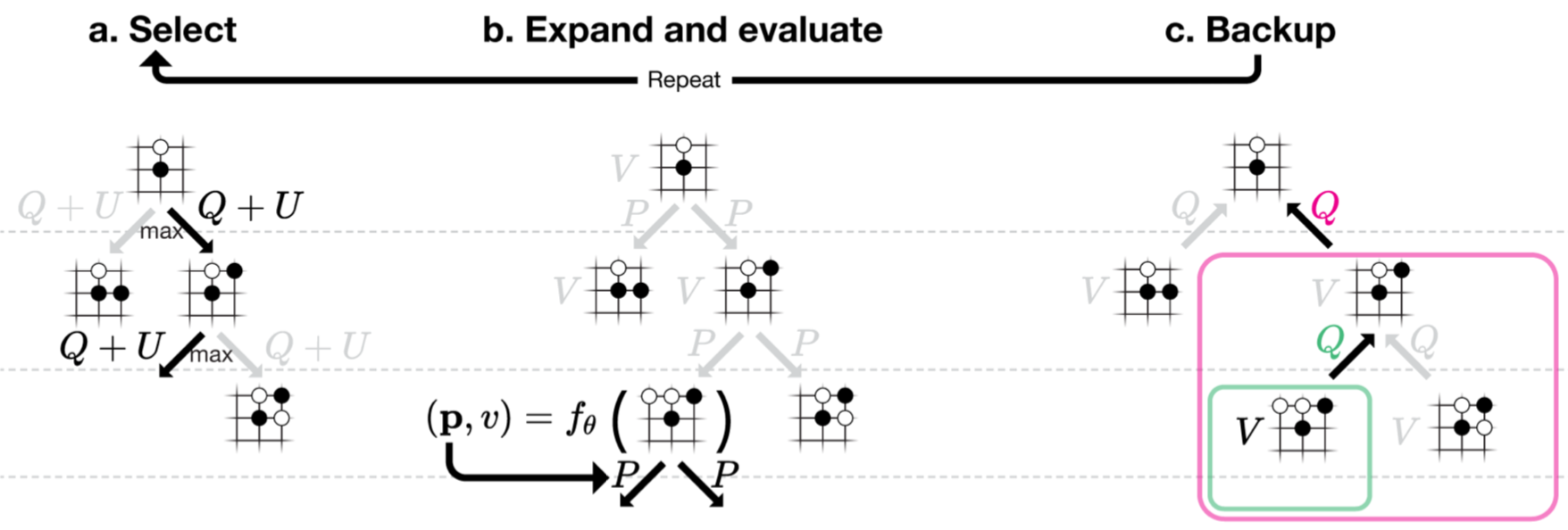

Understanding how to marry abstract decision-making and AlphaZero requires looking at how AlphaZero works. To make decisions, AlphaZero needs to be able to forward simulate the game: it knows exactly what the board will look like after it makes a move, and what the game will look like after the opponent makes their move, and so on.
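To make the idea of forward simulation concrete, here is a minimal sketch on a toy game. It is not AlphaZero's search, which guides a Monte Carlo tree search with a learned neural network; instead it exhaustively expands the full tree of a hypothetical stone-taking game (players alternate removing 1 to 3 stones, and whoever takes the last stone wins). The game, `MAX_TAKE`, and all function names are assumptions made for illustration.

```python
from __future__ import annotations

from functools import lru_cache

MAX_TAKE = 3  # hypothetical toy rule: remove between 1 and 3 stones per turn


def legal_moves(stones: int) -> list[int]:
    """Every move available to the player whose turn it is."""
    return [n for n in range(1, MAX_TAKE + 1) if n <= stones]


@lru_cache(maxsize=None)
def value(stones: int) -> int:
    """Return +1 if the player to move can force a win, -1 otherwise."""
    if stones == 0:
        return -1  # the opponent just took the last stone and won
    # Forward simulate every move: the state after a move is known exactly,
    # and the opponent's best outcome from that state is our worst.
    return max(-value(stones - move) for move in legal_moves(stones))


def best_move(stones: int) -> int:
    """Pick the move that leaves the opponent in the worst position."""
    return max(legal_moves(stones), key=lambda move: -value(stones - move))


print(best_move(7))
```

The point of the sketch is that `stones - move` is a perfect model of the next state. Everything that follows in this section is about what to do when no such model is available.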

For games like Chess and Go, expanding this tree of moves is trivial. In the real world, however, this same process is extremely difficult, even for an AI. If you don’t believe me, take a look at some of the recent progress in video frame prediction, which aims to predict the most likely next few frames of a video. The results are often pretty reasonable, but they tend to be oddly pixelated and are (for now) easily distinguishable from real video. In this problem, the machine learning algorithm has a pretty tough time making predictions more than a few frames into the future. Imagine instead that the robot were to try to predict the likelihood of every possible future video frame for hundreds of frames into the future. The problem is so complex that even generating the data we would need to train such an algorithm is hopelessly difficult.

If we instead use an abstract model of the world, imagining the future becomes much easier. I no longer care exactly what the world looks like, but instead try to make predictions about the types of things that can happen. In the context of my building exit problem, I should not have to imagine what color tile a bathroom has or precisely how large it is to understand that if I enter a bathroom while trying to leave a building, I will likely have to turn around and exit it again first.

Framed this way, abstractions allow us to represent the world as if it were a chess board: the moves correspond to physically moving between rooms or hallways or leaving the building. Equipped with an abstract model of the world, a system like AlphaZero can learn to predict how the world will evolve in terms of these abstract concepts. Using this abstract model of the world to make decisions is therefore relatively straightforward for a system like AlphaZero: the number of actions (and subsequent outcomes) is vastly reduced, allowing us to use familiar strategies for decision-making.
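As a rough illustration of the building-as-chess-board idea, the sketch below represents a small building as a graph in which each edge is one abstract move. The place names, the `ABSTRACT_MAP` layout, and the `plan` function are all hypothetical, and a plain breadth-first search stands in for the learned, AlphaZero-style search discussed in this post.

```python
from __future__ import annotations

from collections import deque

# Hypothetical abstract map: nodes are places, edges are abstract moves.
ABSTRACT_MAP = {
    "apartment": ["bathroom", "hallway"],
    "bathroom": ["apartment"],  # a dead end: the only move is to turn around
    "hallway": ["apartment", "stairs"],
    "stairs": ["hallway", "outside"],
    "outside": [],
}


def plan(start: str, goal: str, graph: dict[str, list[str]] = ABSTRACT_MAP) -> list[str] | None:
    """Breadth-first search for the shortest sequence of abstract moves."""
    parents: dict[str, str | None] = {start: None}
    queue = deque([start])
    while queue:
        place = queue.popleft()
        if place == goal:
            path = []
            while place is not None:  # walk back through the parents to the start
                path.append(place)
                place = parents[place]
            return path[::-1]
        for neighbor in graph[place]:
            if neighbor not in parents:
                parents[neighbor] = place
                queue.append(neighbor)
    return None  # the goal cannot be reached from the start


print(plan("apartment", "outside"))
```

With only a handful of moves available at each node, the search never has to consider footsteps, tile colors, or room dimensions, and the bathroom is ruled out without ever being imagined in detail.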

There are still practical challenges associated with using abstractions during planning that have thus far prevented AI systems like AlphaZero from using them in general. I discuss these later.

Navigation in Unknown Environments

In fact, using abstract models for planning is often much simpler than creating abstract models. So where do abstract models come from?

Disclaimer: This section contains work my colleagues and I have done. While our work does not realize an AlphaZero for the real world, we present ideas that are useful for understanding how one might construct such a system.

To begin to answer this question, we can limit the scope of our inquiry to a simpler problem: navigation in an unknown environment. Imagine that a robot is placed in the middle of a university building and is capable of detecting the local geometry of the environment, i.e., walls and obstacles. The robot’s (admittedly simple) goal is to reach a point that’s roughly 100 meters away in a part of the building it cannot see.

When faced with this task, what does a simple algorithm do? Most robots avoid the difficulties associated with making predictions about the unknown part of the building by ignoring it. The robot plans as if all unknown space is free space. Naturally, this assumption is a poor one. A robot using this strategy to navigate constantly enters people’s offices in an effort to reach the goal, only to “discover” that many of them are dead ends. It often needs to retrace its steps and return to the hallway before it can make further progress towards the goal. This video shows a simulated robot doing just that:
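The "unknown space is free space" assumption can be sketched on a tiny grid map. This is an illustrative toy, not the planner used in our experiments: the grid encoding (`.` for free, `#` for wall, `?` for unknown), the `GRID` layout, and the function name are all assumptions.

```python
from __future__ import annotations

from collections import deque

# Hypothetical partial map: '.' is free, '#' is a wall, '?' is unobserved.
GRID = [
    ".?.",
    ".#.",
    "...",
]


def shortest_path_length(grid, start, goal, unknown_is_free=True) -> int | None:
    """Breadth-first search over grid cells using 4-connected moves."""
    passable = {".", "?"} if unknown_is_free else {"."}
    rows, cols = len(grid), len(grid[0])
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (r, c), dist = queue.popleft()
        if (r, c) == goal:
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in seen and grid[nr][nc] in passable):
                seen.add((nr, nc))
                queue.append(((nr, nc), dist + 1))
    return None  # no route exists under the chosen assumption


# The optimistic plan cuts straight through the unobserved cell.
print(shortest_path_length(GRID, (0, 0), (0, 2)))
# Refusing to enter unknown space forces the long way around.
print(shortest_path_length(GRID, (0, 0), (0, 2), unknown_is_free=False))
```

The optimistic plan is only as good as its guess about the `?` cell. If that cell turns out to be a wall, the robot has to backtrack and replan, which is exactly the behavior described above.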

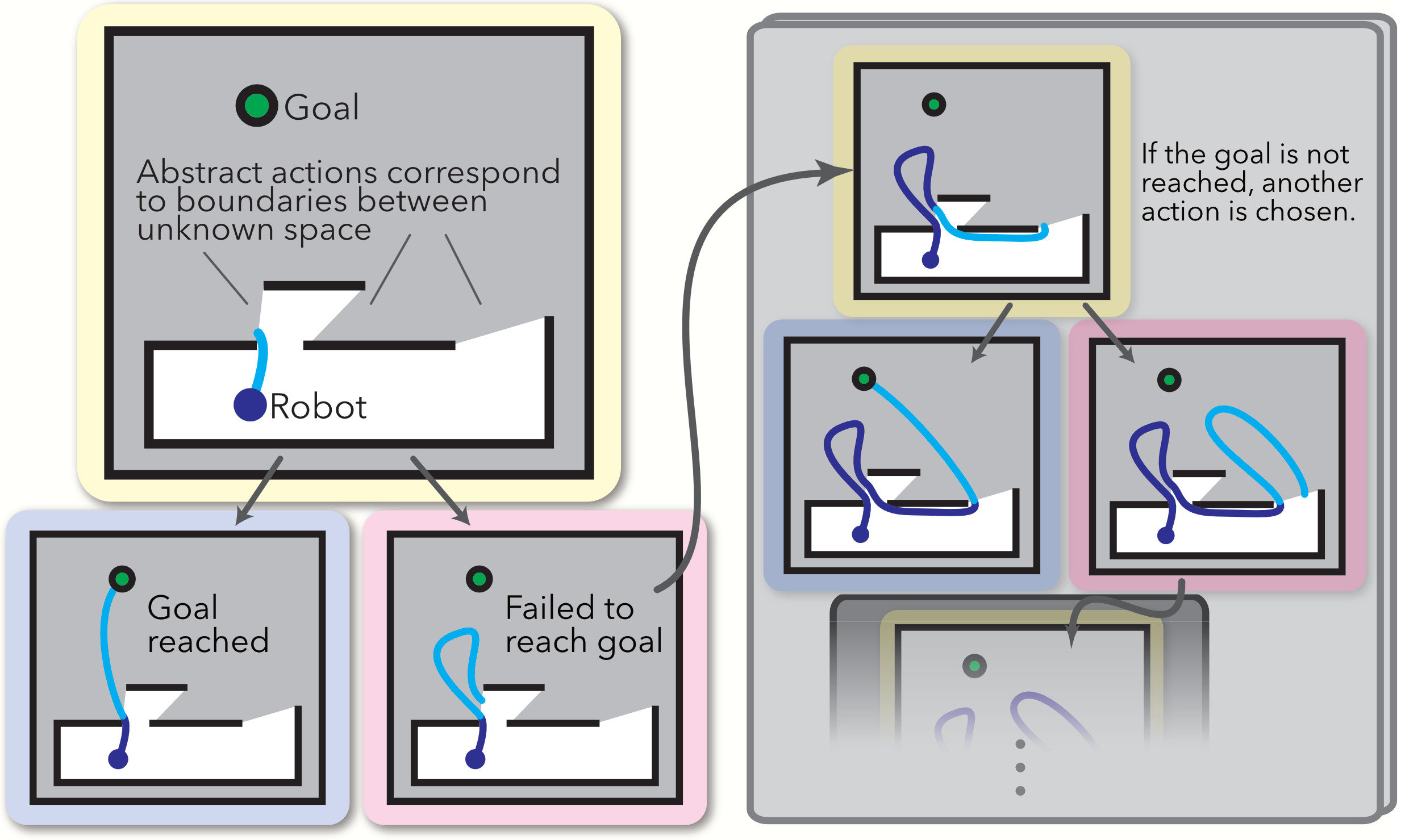

Instead of planning like this, we would like to develop an abstract representation of the world so that the robot can make better decisions. As the robot builds its map of the world, boundaries between free space and unknown space appear whenever part of the map is occluded by obstacles or walls. We use each one of these boundaries, or frontiers, to represent an abstract action: an action consists of the robot traveling to the selected boundary and exploring the unknown space beyond it in an effort to reach the goal. In our model, there are two possible “outcomes” from executing an action: (1) we reach the goal and planning terminates, or (2) we fail to reach the goal and must select a different action.
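One simple way to extract such boundaries from a grid map is sketched below, under assumptions of my own choosing: the map is a list of strings (`.` free, `#` wall, `?` unknown), a frontier cell is a free cell with an unknown cell directly beside it, and touching frontier cells are merged into one abstract action. The names and the grouping rule are illustrative and may differ from what a real system does.

```python
from __future__ import annotations

FREE, UNKNOWN = ".", "?"

# Hypothetical partial map: two openings lead up into unobserved space.
GRID = [
    "?????",
    "#.#.#",
    "#...#",
    "#####",
]


def frontier_cells(grid: list[str]) -> set[tuple[int, int]]:
    """Free cells that have at least one 4-connected unknown neighbor."""
    rows, cols = len(grid), len(grid[0])
    cells = set()
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    cells.add((r, c))
                    break
    return cells


def group_frontiers(cells: set[tuple[int, int]]) -> list[set[tuple[int, int]]]:
    """Merge touching (8-connected) frontier cells into separate boundaries."""
    remaining = set(cells)
    groups = []
    while remaining:
        seed = remaining.pop()
        group, stack = {seed}, [seed]
        while stack:
            r, c = stack.pop()
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    neighbor = (r + dr, c + dc)
                    if neighbor in remaining:
                        remaining.remove(neighbor)
                        group.add(neighbor)
                        stack.append(neighbor)
        groups.append(group)
    return groups


frontiers = group_frontiers(frontier_cells(GRID))
print(len(frontiers), "abstract actions available")
```

Each group returned by `group_frontiers` corresponds to one choice the robot can make: travel to that boundary and explore beyond it.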

Using machine learning, we estimate the likelihood that each boundary leads to the goal, which allows us to better estimate how expensive each move will be. Deciding which action to take involves a tree search procedure similar to that of AlphaZero: we simulate trying each action and its possible outcomes in sequence and select the action that has the lowest expected cost. On a toy example, the procedure looks something like this:
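The expected-cost calculation can also be sketched in a few lines of code. This is a simplified illustration rather than the implementation from the paper in the references: the `Frontier` fields, the numbers, and the assumptions that travel costs do not depend on where the robot currently is and that no further cost is incurred once every boundary has failed are all mine.

```python
from __future__ import annotations

from dataclasses import dataclass
from functools import lru_cache


@dataclass(frozen=True)
class Frontier:
    """One abstract action; every field here is a hypothetical quantity."""
    name: str
    travel_cost: float   # cost to reach the boundary
    p_success: float     # learned probability that it leads to the goal
    success_cost: float  # expected cost beyond the boundary if it does
    failure_cost: float  # expected cost of exploring and coming back if not


@lru_cache(maxsize=None)
def best_action(frontiers: frozenset[Frontier]) -> tuple[float, Frontier | None]:
    """Return (lowest expected cost, first boundary to try)."""
    if not frontiers:
        return 0.0, None  # assumption: nothing left to try, no further cost
    best_cost, best_frontier = float("inf"), None
    for frontier in sorted(frontiers, key=lambda f: f.name):
        # Outcome 2: we fail, pay the failure cost, and must pick again
        # from whatever boundaries remain.
        remaining_cost, _ = best_action(frontiers - {frontier})
        cost = (
            frontier.travel_cost
            + frontier.p_success * frontier.success_cost  # outcome 1: goal reached
            + (1 - frontier.p_success) * (frontier.failure_cost + remaining_cost)
        )
        if cost < best_cost:
            best_cost, best_frontier = cost, frontier
    return best_cost, best_frontier


office = Frontier("office door", travel_cost=5.0, p_success=0.1,
                  success_cost=20.0, failure_cost=30.0)
hallway = Frontier("hallway", travel_cost=10.0, p_success=0.9,
                   success_cost=20.0, failure_cost=30.0)
cost, choice = best_action(frozenset({office, hallway}))
print(choice.name, round(cost, 1))
```

In this made-up example the office door is closer, but the search still prefers the hallway because a failed attempt at the office is likely and expensive. That trade-off between travel cost and the learned probability of success is the heart of the procedure.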

This procedure allows us to use our abstract model of the world for planning. Using our procedure, in combination with the learned probability that each boundary leads to the goal, our simulated robot reaches the goal much more quickly than in the example above:

Challenges

As we try to deploy robots and AI systems to solve complex, real-world problems, it has become increasingly clear that our machine learning algorithms can benefit from being designed so that they mirror the structure of the problem they are tasked to solve. Humans are extremely good at using structure to solve problems:

Humans’ capacity for combinatorial generalization depends critically on our cognitive mechanisms for representing structure and reasoning about relations. We represent complex systems as compositions of entities and their interactions, such as judging whether a haphazard stack of objects is stable. We use hierarchies to abstract away from fine-grained differences, and capture more general commonalities between representations and behaviors, such as parts of an object, objects in a scene, neighborhoods in a town, and towns in a country. We solve novel problems by composing familiar skills and routines […] When learning, we either fit new knowledge into our existing structured representations, or adjust the structure itself to better accommodate (and make use of) the new and the old.

In the previous section, we developed an abstract model of the world specifically tailored to solving a single problem. While our model — which uses boundaries between free space and the unknown to define abstract actions — is effective for the task of navigation, it would certainly be useless if the robot were instead commanded to clean the dishes. It remains an open question how to construct an artificial agent that can “adjust the structure itself to better accommodate” new data or tasks.

While our work is certainly a practical step towards realizing an AlphaZero for the real world, we still have a lot to learn. Where do good abstractions come from? What makes one abstraction better than another? How can abstractions be learned?

As always, I welcome discussion in the comments below or on Hacker News. Feel free to ask questions, share your thoughts, or let me know of some research you would like to share.

References

- Gregory J. Stein, Christopher Bradley & Nicholas Roy, Learning over Subgoals for Efficient Navigation of Structured, Unknown Environments, in: Conference on Robot Learning (CoRL), 2018.

- Philip E. Ross, The Expert Mind, Scientific American, 2006.

- David Silver et al., Mastering the game of Go without human knowledge, Nature, 2017.

- Mohammad Babaeizadeh, Chelsea Finn, Dumitru Erhan, Roy H. Campbell & Sergey Levine, Stochastic Variational Video Prediction, in: International Conference on Learning Representations, 2018.

- David Silver et al., A general reinforcement learning algorithm that masters Chess, Shogi, and Go through self-play, Science, 2018.

- Peter W. Battaglia et al., Relational inductive biases, deep learning, and graph networks, arXiv preprint arXiv:1806.01261, 2018.

I would also like to extend my thanks to my coauthor Chris Bradley and the members of the Robust Robotics Group at MIT, who provided constructive feedback on this article.