For AI, translation is about more than language

Just over two weeks ago, NVIDIA showcased vid2vid, their new technique for video-to-video translation. Their paper shows off a number of different applications, including one particularly striking example in which the researchers automatically convert sketchy outlines of vlog-style videos from YouTube into compellingly realistic videos of people talking to the camera. The results are incredible and really need to be seen to be believed.

When most people hear the term “translation” they think of translating natural language: e.g. translating text or speech from Mandarin to English. Today I want to reinforce the idea that translation can be applied to different types of data beyond language. The vid2vid paper I mentioned above is just the latest and most visually striking example of the transformative power of AI, and modern machine learning is making incredibly rapid progress in this space.

Machine learning is, of course, an incredibly powerful tool for language translation. Recently, researchers from Microsoft achieved human-level translation performance on translating news articles from Mandarin to English.

In the remainder of this article, I will cover:

- A brief definition of “translation” in the context of AI;

- An overview of how modern machine learning systems tackle translation;

- A list of application domains and some influential research for each.

What is “Translation”?

At the crux of translation is the idea of underlying meaning. For instance, the two sentences “Je suis heureux” and “I am happy” both convey the same information in their respective languages. To say that a translation is a “good translation” is to say that it preserves the meaning of the sentence when it is converted from one language to another.

More generally, translation is about expressing the same underlying information in different ways.

Translation is not at all limited to language: translation from one image “language” to another has been an area of active research in computer vision for the last few years. Though many problems in computer vision, like edge detection, can be thought of as “translation”, only recently have modern deep learning techniques enabled image-to-image translation systems that are capable of relating color, texture, and even style between different groups of images. This more modern notion of image-to-image translation is best summarized in the introduction to the fantastic CycleGAN paper:

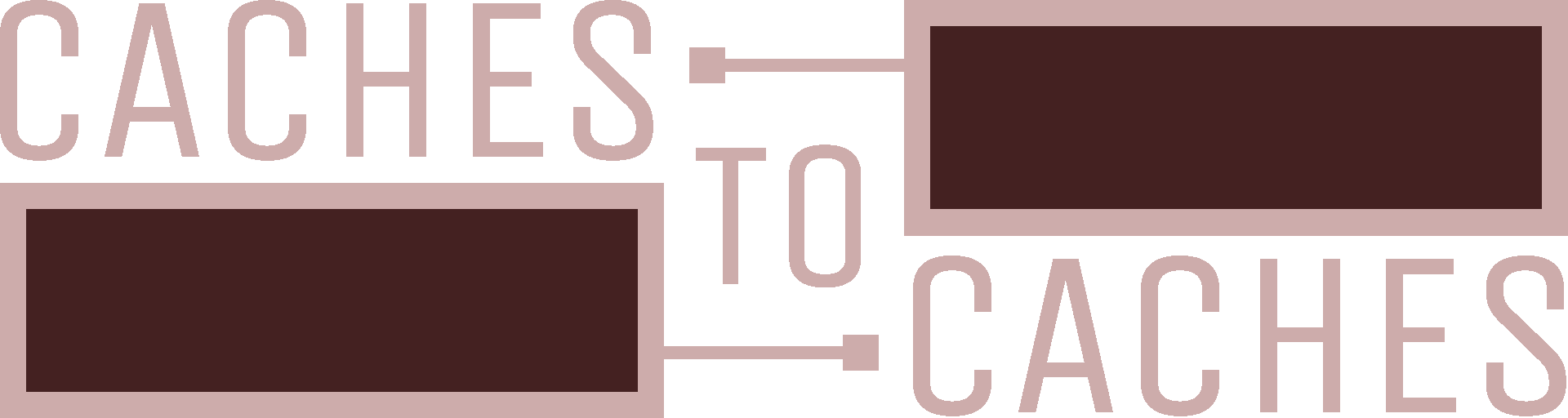

What did Claude Monet see as he placed his easel by the bank of the Seine near Argenteuil on a lovely spring day in 1873? A color photograph, had it been invented, may have documented a crisp blue sky and a glassy river reflecting it. Monet conveyed his impression of this same scene through wispy brush strokes and a bright palette.

What if Monet had happened upon the little harbor in Cassis on a cool summer evening? A brief stroll through a gallery of Monet paintings makes it possible to imagine how he would have rendered the scene: perhaps in pastel shades, with abrupt dabs of paint, and a somewhat flattened dynamic range.

We can imagine all this despite never having seen a side by side example of a Monet painting next to a photo of the scene he painted. Instead we have knowledge of the set of Monet paintings and of the set of landscape photographs. We can reason about the stylistic differences between these two sets [of images], and thereby imagine what a scene might look like if we were to “translate” it from one set into the other.

Of course, few translations are perfect. In language, idioms or experiences unique to a particular culture have meaning that may be difficult to express in other languages. The subtleties of precisely evaluating translation quality are worth a mention, but they are something I will largely avoid for the remainder of the article.

How does AI translation work?

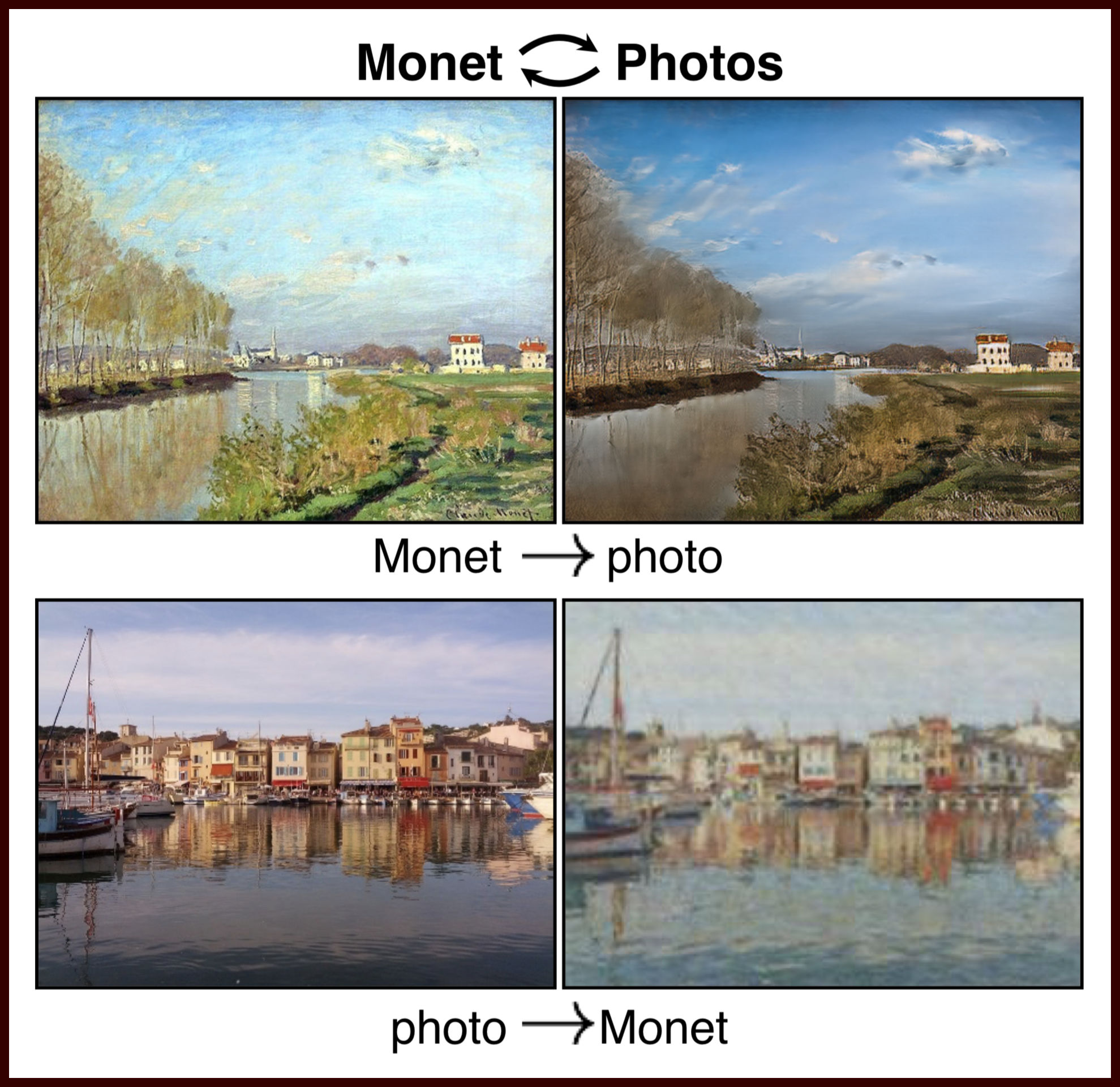

Most of the translation systems I will discuss in this article have a similar structure, known as an encoder-decoder architecture. Imagine that you, while on vacation, observe a beautiful vista overlooking the ocean, and decide that you would love a friend of yours to reproduce the scene in the style of your favorite painter. However, your camera is broken, so you instead decide to send her a letter describing the scene. The process of writing the letter is the encoding phase, in which you try to come up with a short list of salient details that you think will be sufficient for painting the scene. In so doing, you may leave out some minutiae, like the exact texture of the rocks nearby or the shapes of all the clouds. Upon receiving the letter, your friend will have to decode your message and paint the picture. It is also common to refer to your letter as the embedded representation of the image: the intermediate data passed between the encoder and decoder.

In most cases, the encoder-decoder architecture is lossy, meaning that some information is lost in the translation process. However, recent research suggests that this structure is actually what enables many modern machine learning systems to perform as well as they do.
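
To make the letter analogy concrete, here is a minimal sketch in Python. The averaging encoder and the repeating decoder are assumptions chosen purely for illustration; real systems learn both pieces from data, and the names `encode`, `decode`, and `reconstruction_error` are hypothetical.

```python
# Toy encoder-decoder: the "letter" is a short summary of a longer signal.
# The averaging encoder and repeating decoder are illustrative assumptions,
# not learned networks.

def encode(signal, block=4):
    """Summarize each block of values by its mean (the embedding)."""
    chunks = [signal[i:i + block] for i in range(0, len(signal), block)]
    return [sum(chunk) / len(chunk) for chunk in chunks]


def decode(embedding, block=4):
    """Rebuild a full-length signal by repeating each summary value."""
    return [value for value in embedding for _ in range(block)]


def reconstruction_error(original, rebuilt):
    """Mean absolute difference between the input and its round trip."""
    return sum(abs(a - b) for a, b in zip(original, rebuilt)) / len(original)


scene = [0, 1, 2, 3, 10, 10, 10, 10]   # the vista, as eight "pixels"
letter = encode(scene)                  # two numbers instead of eight
painting = decode(letter)               # same length as the scene
error = reconstruction_error(scene, painting)
```

The embedding keeps the broad structure (a dark region followed by a bright one) while the fine variation inside the first block is lost, so `error` is nonzero. That is the sense in which the architecture is lossy.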

For a neural network, everything is typically learned, including both the encoder and decoder blocks and even the embedding space. While the illustrative example I describe above relies on you and your friend communicating via language, there is rarely any such constraint in machine learning systems. As the machine learning systems are trained, they typically learn to preserve the macroscopic features of the input, like the location of mountain ranges or the height of the sun in the sky, while throwing out details like color and texture. It is then the responsibility of the decoder to use its learned experience to produce the output image in the desired style.

Among the key enablers of this technology are Generative Adversarial Networks, which are used to train many of the image-based machine learning systems I discuss throughout the article.

Some different types of automated translation

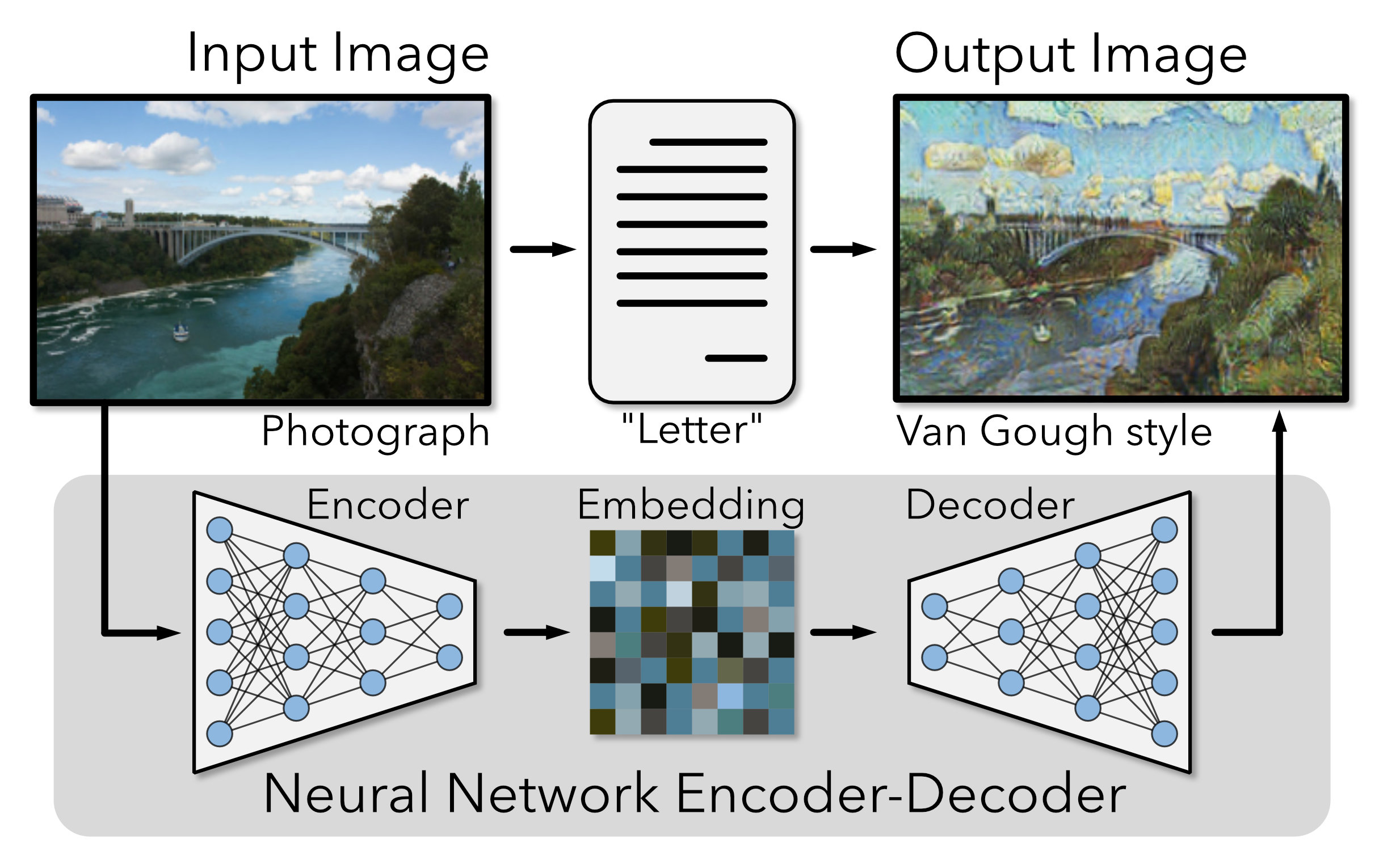

Image-to-Image Translation and pix2pix

Perhaps the biggest milestone in “modern” image-to-image translation was the Image-to-Image Translation with Conditional Adversarial Nets paper, more commonly known as pix2pix, by Isola et al. The paper includes some incredible visuals, such as colorized black-and-white photos and realistic photos of handbags generated from edges.

To someone without experience in the field, the translated images are so compelling as to seem almost unremarkable, yet it’s hard to overstate how exciting these results were when the paper first came out. The work was a big deal at the time and has spawned significant research since: the pix2pix paper has over 1,000 citations as of the time of this writing, and nearly every paper I discuss in this post cites it as foundational research. pix2pix has also inspired a number of interactive online demos, including edge2cats, in which the algorithm will try its best to produce photos of cats from user-drawn sketches. Since the release of pix2pix, other papers have improved the quality and size of the converted images, including the aptly named pix2pixHD from NVIDIA.

I would recommend taking a look at the edge2cats demo. Some of the results are hilarious.

Unsupervised Translation and CycleGAN

As exciting as pix2pix is, it still requires “image pairs” to train the algorithm. If you want to convert daytime photos to nighttime photos, you will need hundreds or thousands of corresponding daytime and nighttime photos taken from the same vantage point, which is why that dataset in the pix2pix paper was drawn from timelapse photos from nature-facing cameras. The CycleGAN paper, which I quote above, introduces a technique to overcome this limitation. Instead of requiring image pairs, the CycleGAN technique only needs two large batches of images: one from each of the target distributions, or languages, of interest.

There are indeed some technical details I am leaving out for brevity and accessibility, but I highly encourage interested readers to look at the CycleGAN paper, which is quite well-written.

The CycleGAN algorithm is designed to recognize common features of each set of images, like how snow is more common in Winter photos and lush grasses are common in Summer photos. As it learns these common features, the algorithm learns to introduce these characteristics during the translation process in an effort to produce realistic images. One of the most striking examples from the CycleGAN paper was horse-to-zebra translation. By providing the algorithm with a few hundred pictures of horses and a few hundred pictures of zebras, the algorithm learns to translate between the two sets and to add stripes to horses as part of the transformation.
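
One of the details I am glossing over is the “cycle-consistent” part of the paper's title: translating an image to the other set and back again should return something close to the original. Below is a minimal sketch of that round-trip penalty in Python. The toy “translators” are hand-written functions on three-pixel images, assumed purely for illustration; in the real system both translators are learned networks and this term is combined with adversarial losses that I omit here.

```python
# Round-trip (cycle-consistency) penalty on toy three-pixel "images".
# All function names and data here are hypothetical illustrations.

def l1(a, b):
    """Mean absolute difference between two equal-length images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(G, F, xs, ys):
    """Penalize x -> G -> F and y -> F -> G for not returning to the start."""
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)
    return forward + backward


def to_winter(img):
    """Toy summer-to-winter translator: brighten every pixel."""
    return [p + 0.25 for p in img]


def to_summer(img):
    """Toy winter-to-summer translator: darken every pixel."""
    return [p - 0.25 for p in img]


def careless_to_summer(img):
    """A translator that ignores its input and always paints mid-gray."""
    return [0.5 for _ in img]


# Two unpaired sets: no summer image has a matching winter image.
summer = [[0.25, 0.5, 0.75], [0.0, 0.25, 0.5]]
winter = [[0.5, 0.75, 1.0], [0.25, 0.5, 0.75]]

good = cycle_consistency_loss(to_winter, to_summer, summer, winter)
bad = cycle_consistency_loss(to_winter, careless_to_summer, summer, winter)
```

The pair of translators that undo each other incurs no penalty, while the careless translator, which discards the content of its input, is penalized. This is what discourages the system from producing a realistic but unrelated image.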

So why is this exciting? Because paired images can be difficult or even impossible to obtain. For example, I would be hard-pressed to find a photo of San Francisco with a foot of snow on the ground. However, I can imagine what such a photo might look like because I have an understanding of what cities look like when it snows. CycleGAN, and some similar work from NVIDIA, have enabled research in other domains as well. For example, researchers from Oxford augmented the CycleGAN algorithm to enable better place recognition across times of day, allowing them to more accurately determine the location of their self-driving car at night with only daytime images to compare against.

In a paper of mine from last year, I use CycleGAN to make simulated images more realistic and then use the resulting image data to train a robot.

As you may have guessed, unsupervised and “semi-supervised” translation techniques have also shown promise for text data. Researchers at Google are doing some incredible work on what they call zero-shot Translation. In short, the researchers train a machine learning system capable of translating between many different languages and find that the system eventually learns to translate between languages for which no direct training data was ever provided to the system. In the example they gave, they trained the system to translate English⇄Korean and English⇄Japanese; the resulting trained system is then capable of reaching nearly state-of-the-art performance for Korean⇄Japanese.

The completely unsupervised approach to language translation has just begun to yield some interesting results, as some researchers from Facebook AI just published some remarkable performance numbers on “low resource” languages in Phrase-Based & Neural Unsupervised Machine Translation.

To those who speak three or more languages, this result may seem almost trivial. However, the idea that the algorithm is capable of learning a representation of human communication that is language agnostic is an incredible accomplishment for the machine learning community. With this advance, Google Translate now includes more language pairs, since this work has enabled translation to and from “low resource” languages, for which bilingual training data is sparse.
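
As I understand the zero-shot paper, a single shared model is told which language to produce by an artificial token placed at the start of the input sentence. The sketch below shows only that bookkeeping in Python; the helper names and the `TRAINED_PAIRS` set are hypothetical, and no actual translation model is involved.

```python
# Bookkeeping for multilingual translation with a target-language token.
# Helper names and the set of trained pairs are illustrative assumptions.

TRAINED_PAIRS = {("en", "ko"), ("ko", "en"), ("en", "ja"), ("ja", "en")}


def tag_source(sentence, target_lang):
    """Prefix the input with a token naming the desired output language."""
    return f"<2{target_lang}> {sentence}"


def is_zero_shot(source_lang, target_lang):
    """True when the model never saw this language pair during training."""
    return (source_lang, target_lang) not in TRAINED_PAIRS


# A request the model was trained on directly.
seen_request = tag_source("Hello", "ko")

# A Korean-to-Japanese request uses exactly the same input format,
# even though that pair never appeared in the training data.
unseen_request = tag_source("안녕하세요", "ja")
```

Because every request has the same shape, nothing in the input format distinguishes a trained pair from an untrained one. Whether the untrained pair works well depends entirely on what the shared model has learned.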

Video-to-Video Translation and vid2vid

By now, you probably have a good idea what this section will be about: the recent vid2vid paper from NVIDIA enables high-quality translation between videos. The accomplishment here is as much an engineering one as it is an advancement in the state of the art. The key insight in this paper is in the way the learning algorithms are trained. The team at NVIDIA begins by training the algorithm to translate single video frames that have been downsampled to low resolution. As training progresses, they gradually increase the complexity of the problem by slowly increasing the resolution of the converted frames and adding more frames to be converted at once. Because the algorithm begins by solving a much simpler version of the problem and difficulty is layered on afterwards, the training process is much more stable and results in much higher quality output.
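
The coarse-to-fine idea can be sketched as a simple training schedule. The Python below is an illustration under assumed numbers: the starting and final resolutions, the frame counts, and the doubling rule are all hypothetical and are not the schedule used in the paper.

```python
# A coarse-to-fine curriculum: each stage is harder than the previous one.
# Resolutions, frame counts, and the doubling rule are assumptions.

def curriculum(start_res=64, final_res=512, start_frames=1, final_frames=8):
    """Return (resolution, frames) stages from easiest to hardest."""
    res, frames = start_res, start_frames
    stages = [(res, frames)]
    while res < final_res or frames < final_frames:
        if res < final_res:
            res = min(res * 2, final_res)           # sharpen the frames
        if frames < final_frames:
            frames = min(frames * 2, final_frames)  # lengthen the clip
        stages.append((res, frames))
    return stages


stages = curriculum()
for resolution, frames in stages:
    # A real pipeline would train the model at this setting before moving on.
    print(f"train on {frames} frame(s) at {resolution}x{resolution}")
```

Each stage would start from the weights learned in the previous one, so the model only ever has to adapt to a modest increase in difficulty.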

The training procedure for the vid2vid paper is very clearly inspired by other work from NVIDIA, including pix2pixHD and the “Progressive Growing of GANs” paper.

Image Captioning

Why should we limit ourselves to translating between input-output pairs of the same data type? Image captioning is the process of generating a sentence to describe an input image. The learning problem for captioning an image shares the same encoder-decoder structure I described above. Yet, as you can imagine, the structures of the encoder and decoder blocks are very different from one another, since the formats of the input and output data are so different. Here, the encoder block takes an image as input, while the decoder block takes the resulting embedded representation and decodes it into a sentence. Because of the way the system is designed, every component in the system can be jointly optimized: the encoder, decoder, and embedded representation are all updated together during training to best fit the data.

The landmark work in automated image captioning, the Show and Tell paper, was first presented by Google in 2014.

Researchers are also interested in the inverse to the image captioning problem, generating an image corresponding to a sentence. However, language is notoriously vague, so live demos of image generation techniques like AttnGAN have been the source of some humorous failure cases: This AI is bad at drawing but will try anyways.

Some final thoughts

This list is by no means complete: there is too much interesting research for me to have included everything. Many types of problems fit into the translation paradigm. The encoder-decoder architecture I have spent much of this article talking about has proven extremely flexible. I am constantly surprised at how clever the machine learning community is at reusing learned encoder blocks for dimensionality reduction: the embedded representation output from the encoder is typically smaller in size, but should contain most of the “important” information, enabling further learning algorithms to be trained on this intermediate representation.

As always, I welcome discussion in the comments below or on Hacker News. Feel free to ask questions, or let me know of some research I might be interested in.

References

- T.-C. Wang, M.-Y. Liu, J.-Y. Zhu, G. Liu, A. Tao, J. Kautz, and B. Catanzaro. Video-to-Video Synthesis. arXiv:1808.06601, 2018.

- J.-Y. Zhu, T. Park, P. Isola, and A. Efros. Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks. In ICCV, 2017.

- P. Isola, J.-Y. Zhu, T. Zhou, and A. Efros. Image-to-Image Translation with Conditional Adversarial Nets. In CVPR, 2017.

- G. Stein and N. Roy. GeneSIS-RT: Generating Synthetic Images for training Secondary Real-world Tasks. In ICRA, 2017.

- T.-C. Wang, M.-Y. Liu, J.-Y. Zhu, A. Tao, J. Kautz, and B. Catanzaro. High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs. arXiv:1711.11585, 2017.

- M.-Y. Liu, T. Breuel, and J. Kautz. Unsupervised Image-to-Image Translation Networks. In NIPS, 2017.

- M. Johnson, M. Schuster, Q. Le, M. Krikun, Y. Wu, Z. Chen, N. Thorat, F. Viégas, M. Wattenberg, G. Corrado, M. Hughes, and J. Dean. Google’s Multilingual Neural Machine Translation System: Enabling Zero-Shot Translation. arXiv:1611.04558, 2016.

- G. Lample, M. Ott, A. Conneau, L. Denoyer, and M.’A. Ranzato. Phrase-Based & Neural Unsupervised Machine Translation. arXiv:1804.07755, 2018.

- T. Karras, T. Aila, S. Laine, and J. Lehtinen. Progressive Growing of GANs for Improved Quality, Stability, and Variation. arXiv:1710.10196, 2017.

- O. Vinyals, A. Toshev, S. Bengio, and D. Erhan. Show and Tell: A Neural Image Caption Generator. arXiv:1411.4555, 2014.

- T. Xu, P. Zhang, Q. Huang, H. Zhang, Z. Gan, X. Huang, and X. He. AttnGAN: Fine-Grained Text to Image Generation with Attentional Generative Adversarial Networks. arXiv:1711.10485, 2017.